WhoDB with Offline AI

The Last Database Diagram You’ll Ever Draw by Hand

Outdated documentation is one of the quietest productivity drains in software teams.

That carefully crafted architecture diagram from a few sprints ago? It was once the “single source of truth.” Today, it’s a misleading snapshot of how things used to be.

A changed foreign key here, a new service there, and suddenly, those diagrams and schema maps turn from helpful to harmful. Every developer has hit that moment: debugging a mysterious issue only to discover the docs were wrong.

This pain point is exactly what inspired WhoDB.

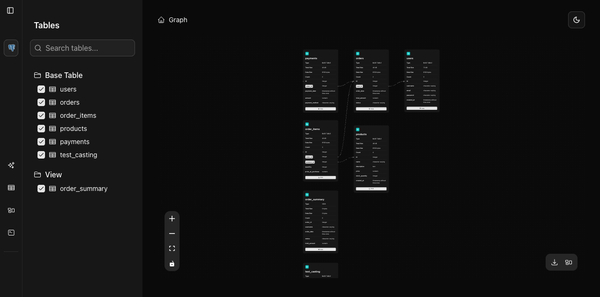

What if diagrams weren’t static artifacts, but live, dynamic answers generated directly from your database?

Imagine asking:

“Show me how the users table connects to orders and payments.”

…and instantly getting an up-to-date, accurate entity diagram.

That’s no longer a thought experiment. It is now a reality.

Dynamic Diagrams, Now Powered by Local AI

Turning natural language into SQL and then into clean visuals requires powerful language models. But most solutions send both your schema and your data to cloud APIs.

That’s a non-starter for teams that take security, privacy, and data protection seriously.

Your data is your intellectual property, so it should never leave your environment. That is why we are introducing first-class Ollama support in WhoDB.

Now, you can run models like Llama 3 or Mistral entirely offline and integrate them seamlessly with WhoDB.

Everything happens locally: no external calls, no data exposure, no compromises.

How It Works

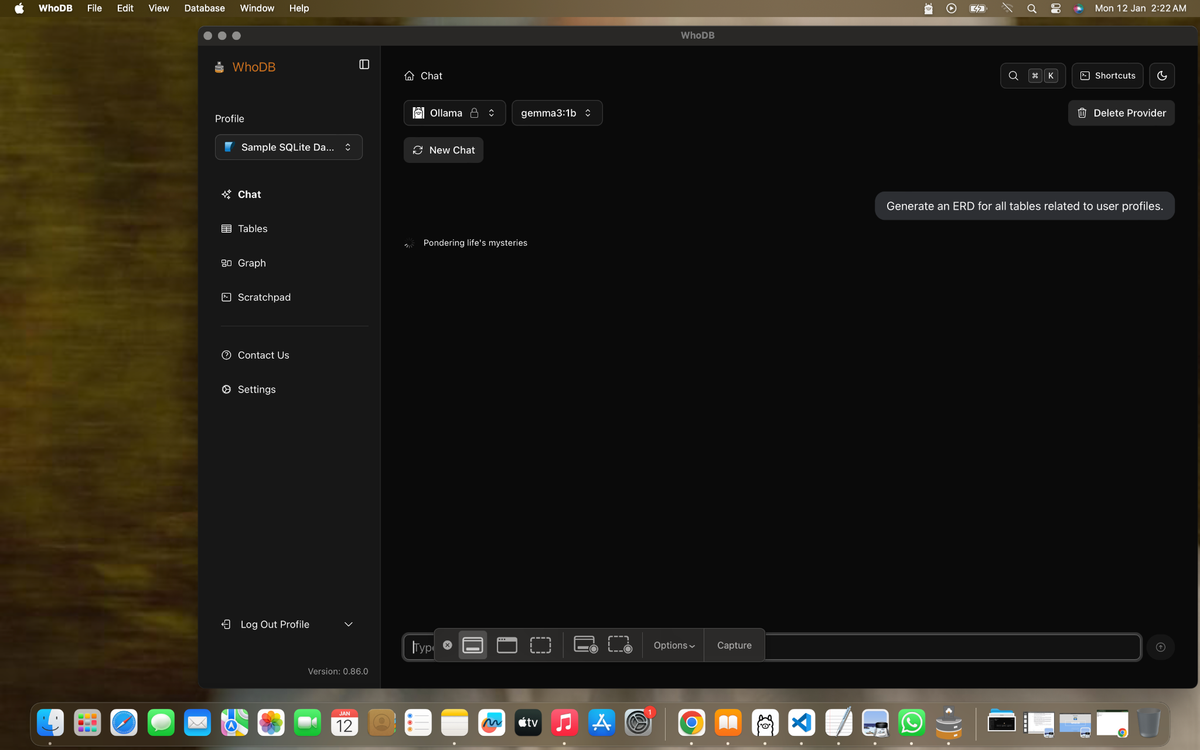

- Run your preferred LLM locally using Ollama.

- In WhoDB, ask a question:

“Generate an ERD for all tables related to user profiles.”

- The prompt is sent to your local Ollama instance, never to the cloud.

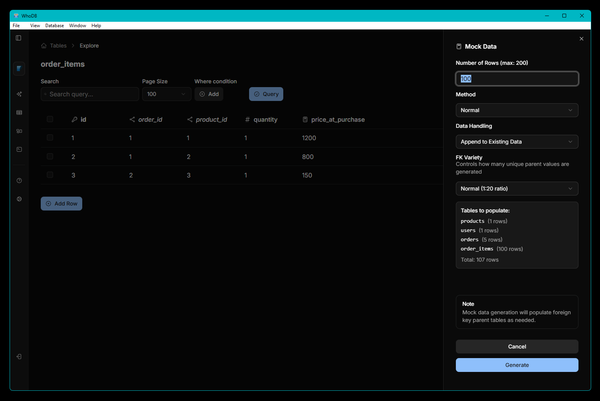

- Ollama generates the SQL, WhoDB runs it, and instantly visualizes the result as a Mermaid diagram.
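To make the "never to the cloud" step concrete, here is a minimal sketch of what a request to a local Ollama instance can look like. It assumes Ollama's default address (`http://localhost:11434`) and its `/api/generate` endpoint. The prompt wording and the `build_request` and `ask_local_model` helpers are hypothetical and only for illustration; they are not WhoDB's actual implementation.

```python
import json
import urllib.request

# Ollama's default local endpoint; adjust if your instance listens elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(question: str, schema_ddl: str, model: str = "llama3") -> urllib.request.Request:
    """Build a POST request for a local Ollama instance (nothing is sent yet)."""
    # Hypothetical prompt wording; WhoDB's real prompt is not shown in this post.
    prompt = (
        "Given this schema:\n"
        f"{schema_ddl}\n\n"
        f"Write one SQL query that answers: {question}"
    )
    # stream=False asks Ollama for a single JSON object instead of a token stream.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(request: urllib.request.Request) -> str:
    """Send the request and return the generated text (requires Ollama running)."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

Because the URL points at `localhost`, the question and the schema stay on your machine. Calling `ask_local_model(build_request(...))` only works once Ollama is running and the chosen model has been pulled.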

The result? A real-time, accurate schema view that stays private, fast, and always up to date.
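For a feel of how a diagram can be derived from the live schema rather than drawn by hand, here is a small sketch that reads foreign keys from a database and prints Mermaid `erDiagram` text. It uses an in-memory SQLite database as a stand-in, reads the foreign-key metadata directly instead of going through model-generated SQL, and the `mermaid_erd` function and the `users`/`orders`/`payments` tables are hypothetical examples, not WhoDB's code.

```python
import sqlite3


def mermaid_erd(conn: sqlite3.Connection) -> str:
    """Return a Mermaid erDiagram built from the foreign keys in a SQLite database."""
    lines = ["erDiagram"]
    tables = [
        row[0]
        for row in conn.execute(
            "SELECT name FROM sqlite_master "
            "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
        )
    ]
    for table in tables:
        # PRAGMA foreign_key_list columns: id, seq, table, from, to, ...
        for fk in conn.execute(f'PRAGMA foreign_key_list("{table}")'):
            parent, column = fk[2], fk[3]
            # One parent row relates to zero or more child rows.
            lines.append(f"    {parent} ||--o{{ {table} : {column}")
    return "\n".join(lines)


# Hypothetical example schema, created in memory for the demo.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (id INTEGER PRIMARY KEY);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER REFERENCES users(id));
    CREATE TABLE payments (id INTEGER PRIMARY KEY, order_id INTEGER REFERENCES orders(id));
""")
print(mermaid_erd(conn))
```

Since the diagram text is regenerated from the database each time, a changed foreign key shows up on the next run instead of lingering in a stale picture.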

Why It Matters

This update isn’t just a new feature. It’s part of a larger shift toward local-first AI. Developers shouldn’t have to choose between privacy and innovation.

With Ollama integration, WhoDB delivers:

- Zero data leaks: your schema stays local.

- Instant, accurate diagrams always in sync with your database.

- Seamless AI assistance without cloud dependencies.

We’re already working on expanding local AI support with integrations like LM Studio and more open-source runtimes. Local AI is the future of developer tools.

With WhoDB, you can visualize, query, and explore your data entirely offline.

Try it out and see what the next generation of private, AI-powered database tools feels like.

👉 WhoDB